Should politicians have to pass mandatory fact-checking during campaigns? Short answer: yes, in some form, if it protects voters and only if it stays fair and transparent.

Fact-checking means checking claims against trusted data, expert sources, and public records. It matters now because social media, short videos, and rapid news cycles push claims faster than most people can verify. For students and first-time voters, clear, accurate information helps you choose leaders with confidence.

The case for yes: it curbs false claims, sets shared standards, and rewards honest campaigning. It can reduce confusion, support media literacy, and keep debates on policy, not spin.

The case for no: it could be biased if run poorly, slow urgent statements, or chill free speech. Politicians might game the system, and bad actors could still spread falsehoods outside official checks.

This post will compare both sides, share real-world examples from recent campaigns, and offer a simple student action plan for smart checking. You will learn how to spot weak claims, cross-check sources fast, and avoid common traps.

Table of Contents

- The Case Against Mandatory Fact-Checking: Free Speech, Bias, and Cost

- How Mandatory Fact-Checking Could Work: Rules, Tools, and Oversight

- What Can Students Do Now: A Simple Fact-Checking Toolkit

- Conclusion

The Case Against Mandatory Fact-Checking: Free Speech, Bias, and Cost

Mandatory checks sound tidy on paper. In real campaigns, rules can reshape how politicians speak, how the media covers claims, and how voters react. The case against compulsion is not a defence of lying. It is a warning about how rules can chill honest debate, bend under bias, and slow the flow of timely information in an election sprint.

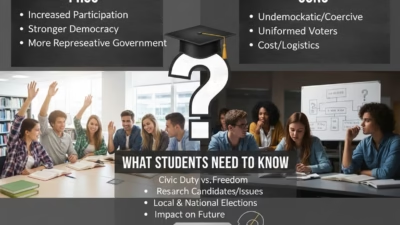

Free expression and healthy debate

Supporters of strict checks argue that vetting cleans up lies. That is fair. But speech in politics is not just about facts, it is also about values, forecasts, and priorities. You can fact-check a number, not a promise. If rules are heavy-handed, politicians may avoid bold ideas in case a claim gets flagged. That can shrink the range of views students hear.

- Chilling effect: If every sentence risks a label, speakers default to safe lines. New voices, smaller parties, and student candidates often pull back first.

- Ideas vs facts: Some statements are contestable, not false. Example: “This policy will boost growth.” That is a prediction, not a fact. Turning forecasts into pass-or-fail can mute useful debate.

- Self-censorship: Campaign staff may cut detail to dodge scrutiny. Shorter, vaguer messages follow, which helps spin, not depth.

The counterpoint is strong. Clear rules can target verifiable claims, like costs, crime rates, or voting records, while leaving room for argument on values. Smart design matters. If checks focus on data points that are public and comparable, you protect debate while curbing fabrications.

Who watches the watchers, risk of bias

Fact-checkers must be independent, consistent, and transparent. Without that, even strong methods look suspect. Bias can be real or perceived. In politics, perception often matters more than statistics.

- Method transparency: Publish criteria, datasets, timeframes, and thresholds. Show how ratings are decided and who signs off.

- Balanced sampling: If teams only check high-profile figures from one side, trust collapses. Balanced selection by topic and party is key.

- Appeals and corrections: A rapid, fair appeal process reduces claims of unfairness. Time-stamped updates show integrity when evidence changes.

Critics worry that staffing, funding, and editorial choices can tilt outcomes. If students see more labels on one camp, they may assume the referee is biased, even when methods are solid. On the flip side, standardised rubrics, blinded review, and published audits can lift trust. The lesson is simple: the checker’s process must be as checkable as the claims.

Speed, scale, and cost during elections

Elections are fire hoses. Posts, leaflets, doorstep scripts, TV debates, podcasts, and late-night clips flood feeds. Mandatory checks must keep pace or they become a bottleneck.

- Speed: A false claim can go viral in minutes. A thorough check can take hours or days. Delays risk two harms: letting the claim spread, and creating a perception that checks are used to stall opponents.

- Scale: National campaigns output thousands of claims, many repeated across formats. Triaging what to check first becomes political by default.

- Cost: You need trained researchers, editors, legal advice, data tools, and an appeals team. Add AI detection and media monitoring software, plus server costs. The bill rises fast.

A simple table helps sum up the trade-offs:

| Challenge | What it looks like in practice | Risk if mishandled |
|---|---|---|
| Speed | Live debate fact-checks vs detailed reviews | Late labels that feel moot or tactical |
| Scale | Thousands of claims across platforms | Missed checks on key issues |
| Cost | Staff, tech, legal, appeals | Uneven coverage, burnout, lower quality |

Proponents argue that AI can flag duplicates and surface likely repeats, cutting workload. That helps, but AI still needs human review to avoid false positives. If the system lags, campaigns can claim censorship or incompetence. If it rushes, errors creep in and trust drops.

Unintended effects and backlash

Fact-check labels do not always fix the problem. Sometimes they make it worse.

- The spotlight effect: Labels can boost attention to a false claim. People who missed the original post now see the controversy and share it again.

- Echo chambers harden: Supporters may treat checks as attacks, which boosts in-group loyalty. A label becomes a badge rather than a warning.

- Gaming the refs: Savvy campaigns may issue borderline claims to trigger a label, then shout bias to earn free coverage. The row becomes the story.

Here is the debate in plain terms:

- Against mandates: Heavy rules risk censorship by process. They can amplify falsehoods, drain resources, and spark tribal backlash.

- For mandates, with limits: Narrow the scope to verifiable data, timebox decisions, publish methods, and require fast right of reply. Focus on high-impact claims that affect votes, such as costs, health, benefits, or taxes.

A practical middle path can help students and first-time voters:

- Prioritise material claims about money, rights, and safety.

- Flag uncertainty when data is mixed, rather than forcing a verdict.

- Show your working with sources and timestamps.

- Invite counters with clear, speedy appeals.

The core question is not whether truth matters. It is how to protect open debate while stopping outright fabrications. If rules chill speech, fuel bias claims, and slow voters’ access to information, they fail. If checks stay targeted, transparent, and quick, they can help without dulling the debate students deserve.

How Mandatory Fact-Checking Could Work: Rules, Tools, and Oversight

Rules only help if they are clear, simple, and fair. A workable model for mandatory checks focuses on claims you can measure, a transparent method, smart use of tech, and light-touch penalties. It should protect voters without freezing debate, and it must allow fast corrections during campaigns.

Clear scope: which claims, which channels, and light penalties

Set the bar where numbers and records live. That means verifiable, measurable claims, not opinions or predictions.

- Covered claims: statistics, costs, turnout figures, crime rates, NHS wait times, emissions targets, tax thresholds, voting records.

- Out of scope: values, promises, forecasts, slogans, sarcasm, and satire.

- Channels: official party ads, paid social ads, party-owned posts and emails, candidate speeches and leaflets, televised or streamed campaign statements.

Why it helps students: it focuses on claims that shape budgets and rights. Why some push back: edge cases will appear, like partial estimates or early data that later changes.

Use proportionate, light penalties that fix the record rather than punish speech.

- Required corrections within 24 to 48 hours, pinned for visibility.

- Context labels with links to sources.

- Temporary ad pauses on repeat falsehoods until corrected.

- Transparency notices for campaigns that miss deadlines.

Supporters say these measures nudge honesty without chilling debate. Critics worry labels can backfire by drawing attention to borderline claims. The middle line is to target material claims, keep penalties proportionate, and focus on clear fixes over blame.

Transparent methods and a right to appeal

Trust comes from process, not just verdicts. Make the method public, repeatable, and easy to challenge.

- Open sources: point to public datasets, official reports, and peer-reviewed studies. Share timeframes, definitions, and cut-off dates.

- Plain rubrics: define ratings like Accurate, Needs Context, Misleading, or False. Keep the scale small and consistent.

- Rapid right to reply: a clear, two-step appeal that fits election speed. For example, a 24-hour informal review, then a 48-hour panel decision.

Independent oversight matters. Aim for a board that mixes data analysts, legal scholars, journalists, social scientists, and citizen representatives. Rotate membership, publish conflicts of interest, and release quarterly audits.

A quick snapshot of benefits and risks:

| Method element | Benefit for voters | Risk if weak |
|---|---|---|
| Open sources | Anyone can check | Selective sourcing fuels bias claims |
| Clear rubrics | Consistent calls | Hair-splitting labels confuse |
| Fast appeals | Fairness under pressure | Delays look tactical |
| Mixed oversight | Balance and credibility | Tokenism if voices lack real power |

Proponents like the sunlight this model creates. Critics worry about performative transparency, where the paperwork looks tidy but choices still tilt. The fix is to publish every decision, every correction, and every appeal outcome with timestamps.

Use tech wisely, keep humans in charge

AI can speed the pipeline, but it should not deliver the verdicts. Use it as a triage tool, not a referee.

- What AI can do well: detect duplicate claims, match statements to public data tables, cluster similar posts, and flag likely exaggerations by spotting out-of-range numbers.

- Where humans must lead: interpreting context, weighing uncertainty, judging material impact, and deciding final labels.
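To make the split between machine triage and human judgement concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a description of any real fact-checking system: the function names (`normalise`, `group_duplicates`, `flag_out_of_range`) are hypothetical, duplicate detection is reduced to crude text normalisation, and the plausible range for a number is supplied by a human reviewer rather than learned.

```python
import re
from collections import defaultdict


def normalise(claim: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace so near-identical wording matches."""
    text = re.sub(r"[^a-z0-9% ]+", " ", claim.lower())
    return " ".join(text.split())


def group_duplicates(claims: list[str]) -> dict[str, list[int]]:
    """Map each normalised claim to the positions where it appears in the input."""
    groups: dict[str, list[int]] = defaultdict(list)
    for index, claim in enumerate(claims):
        groups[normalise(claim)].append(index)
    return dict(groups)


def flag_out_of_range(claim: str, low: float, high: float) -> list[float]:
    """Return numbers in the claim that fall outside a reviewer-supplied range.

    This only surfaces candidates for a person to look at; it does not rate the claim.
    """
    without_separators = claim.replace(",", "")  # treat "1,200" as 1200
    numbers = [float(n) for n in re.findall(r"\d+(?:\.\d+)?", without_separators)]
    return [n for n in numbers if not low <= n <= high]


# Hypothetical example claims, invented for illustration.
claims = ["Crime fell by 12%!", "crime  fell by 12%", "Waiting lists hit 250%"]
print(group_duplicates(claims))
print(flag_out_of_range(claims[2], low=0, high=100))
```

The sketch stops at sorting and surfacing. Nothing in it assigns a label, which matches the principle above that the final call stays with people.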

Build safeguards into the workflow:

- Audit trails: keep a full log of sources checked, queries run, and who signed off.

- Source links: every label should link to datasets and archived copies.

- Regular reviews: schedule weekly sampling to check error rates and adjust thresholds.

- Diverse review panels: rotate reviewers across parties and topics to spread judgement calls.

Supporters say AI cuts noise and frees people for hard cases. Critics warn about bias in training data and false positives. The balance is simple: machines sort and surface, people decide, and the whole pipeline is open to scrutiny.

Education first: pair checks with media literacy

Fact-checking works best when citizens can spot red flags themselves. Build public dashboards that track claims, show sources, and highlight corrections in one place. Add classroom guides that teach students to read charts, compare baselines, and check for cherry-picked timeframes.

Practical tools that help:

- Short video explainers on how a claim was verified.

- Simple checklists for spotting dodgy stats, like moving baselines or selective start dates.

- Shareable graphics that compare a politician’s claim to the nearest official figure.

Advocates argue this reduces reliance on heavy rules over time because voters get quicker at sifting truth from hype. Critics note that not everyone will use the tools, and attention spans are short. Keep it bite-sized, visual, and timely. The long game is a student voter base that needs fewer labels because they can test claims on the fly.

What Can Students Do Now: A Simple Fact-Checking Toolkit

You do not need a newsroom to check claims. A few steady habits can stop you sharing weak or misleading posts. Think of this as a pocket toolkit you can use before you tap share. Supporters of stricter checks say students should be cautious and verify first. Critics worry that over-checking slows conversation. The balance is simple: move fast, but pause long enough to be fair to the facts.

A 60-second pre-share checklist

Before a link or clip leaves your feed, use this quick sweep. It is fast, practical, and saves future headaches.

- Read past the headline. Headlines sell. The article body tells. Scan for the key claim, the method, and the counterpoint.

- Check the date. Old stories resurface as if they are new. Ask, is this still true today?

- Find the original source. Look for a named report, dataset, or transcript. If the post does not link out, search for the quote in quotation marks and add a source filter like site:gov.uk.

- See if more than one trusted outlet reports it. Two or three independent reports beat one viral thread. Aim for outlets with clear corrections pages.

- Pause before you share. Ask yourself, would you be happy to defend this in a tutorial? If not, save it and return later.

Supporters of caution say this stops cheap clicks from shaping votes. Critics warn it can feel slow in group chats. A compromise helps: save the post, drop a note saying you are checking, then return with sources.

Pro tip examples:

- “Claim: ‘Unemployment doubled last year.’ Source check: ONS data, last twelve months.”

- “Quote search: paste the line into Google with quotes, add politician’s name, look for the full transcript.”
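If you run quote searches often, the steps in the tip above can be sketched as a small Python helper. This is only an illustration: `build_quote_query` is a hypothetical name, and it simply assembles the text you would paste into a search box (exact-phrase quotes, an optional speaker name, an optional `site:` filter). It does not call any search service.

```python
def build_quote_query(quote: str, speaker: str = "", site: str = "") -> str:
    """Build a search string: exact-phrase quote, optional speaker, optional site: filter."""
    # Remove stray double quotes and extra spaces so the phrase is wrapped exactly once.
    cleaned = " ".join(quote.replace('"', "").split())
    parts = [f'"{cleaned}"']
    if speaker:
        parts.append(speaker.strip())
    if site:
        parts.append(f"site:{site.strip()}")
    return " ".join(parts)


# "Jane Doe" is a placeholder name, not a real politician.
print(build_quote_query("Unemployment doubled last year", speaker="Jane Doe", site="gov.uk"))
# prints: "Unemployment doubled last year" Jane Doe site:gov.uk
```

Wrapping the line in quotes asks the search engine for the exact wording, which makes it easier to find the full transcript rather than paraphrases.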

Spot red flags and find better sources

Weak claims usually share the same look. Train your eyes to catch the pattern, then swap to stronger proof.

Common red flags:

- No author or bio: hidden bylines and vague site names.

- No data or broken links: claims with no table, no link, or links that 404.

- Cropped images or videos: tight frames hide context. Reverse image search if unsure.

- Urgent, emotional language: “disaster,” “exposed,” “they do not want you to know.” Heat often hides light.

- Cherry-picked timeframes: a graph that starts or ends on a spike.

- Anonymous “insider” quotes: lots of drama, no trail.
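The cherry-picked timeframe flag is easier to spot once you have seen the arithmetic. The short Python sketch below uses made-up figures, not real statistics, to show how the same series supports two very different headlines depending on the start year. The series name and values are invented for illustration.

```python
def percent_change(series: dict[int, float], start: int, end: int) -> float:
    """Percentage change between two years in a {year: value} series."""
    return (series[end] - series[start]) / series[start] * 100


# Made-up illustrative figures, not real statistics.
recorded_incidents = {2018: 100.0, 2019: 104.0, 2020: 80.0, 2021: 98.0, 2022: 102.0}

from_dip = percent_change(recorded_incidents, 2020, 2022)     # starts on the unusual 2020 low
full_window = percent_change(recorded_incidents, 2018, 2022)  # uses the whole series

print(f"2020 to 2022: {from_dip:+.1f}%")     # prints: 2020 to 2022: +27.5%
print(f"2018 to 2022: {full_window:+.1f}%")  # prints: 2018 to 2022: +2.0%
```

Both numbers are arithmetically correct. The difference comes entirely from where the comparison starts, which is why asking "What is the timeframe?" matters.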

Better sources to cross-check:

- Official statistics: ONS, NHS, Electoral Commission, IMF, World Bank. Look for clear methods and date stamps.

- Reputable outlets: BBC, FT, Reuters, AP. Read the corrections policy.

- Non-partisan fact-check groups: Full Fact, FactCheck.org, AFP Fact Check, PolitiFact. Check how they rate claims and what evidence they use.

- Primary documents: budgets, voting records, committee reports. When in doubt, read the PDF.

Why this helps: strong sources survive scrutiny, weak posts fall apart when you tug the first thread. Supporters say this builds media muscle. Critics note that official data can lag. Fair point, so check the latest release date and note if figures are provisional.

Simple test you can run:

- “What is measured?”

- “What is the timeframe?”

- “What is the source link?”

- “Is there a credible counter-source?”

Debate with respect and stay curious

Politics needs heat and light, not heat alone. Keep discussions sharp on facts, soft on people. Ask for sources, listen, and adjust when evidence is strong. Supporters of polite debate say good tone keeps friends talking. Critics worry it can blunt urgent pushback. You can do both: be firm on evidence and kind in voice.

Useful moves:

- Ask for receipts: “Happy to read a link if you have one.”

- Slow the pace: “Let’s check numbers before we go further.”

- Acknowledge good points: “Fair, that part was accurate.”

- Update publicly: “I got this wrong. Here is the fix.”

Sentence starters that keep the room calm:

- “Can you share the source for that claim?”

- “I have a different figure from [source]. How do you read it?”

- “What timeframe are you using for that stat?”

- “I might be missing context. Where did that quote come from?”

- “If the latest data says X, would you change your view?”

- “I used to think Y, but this report shifted me because…”

Balancing both sides:

- Supporters of strict verification say always wait for sources before responding.

- Critics say that can stall real-time chat and blunt activism.

- Middle path: ask for a source while outlining your current evidence, then circle back with an edit if needed.

Key takeaway: curiosity beats certainty. Facts are a moving target in campaigns, but your habits can stay steady. Read, check, compare, and share with care.

Conclusion

For mandatory fact-checking, the gains are clear: fewer false claims, sharper debates, and more trust. It sets shared standards, highlights evidence, and rewards accuracy. Against it, there are real risks: chilled speech, skewed checks, and slow corrections when time matters. Values, forecasts, and trade-offs need space, facts need proof, and both should breathe.

A sensible middle path blends light, clear rules with strong education and open data. Focus on material claims, show your working, and allow fast appeals. That balances fairness with free speech, and helps students judge politicians on evidence, not noise. The aim is not perfection; it is integrity in the rush of a campaign.

Quick policy checklist:

- Narrow scope to verifiable, high-impact claims.

- Publish sources, methods, and timeframes.

- Use simple ratings and time-boxed corrections.

- Provide a rapid, fair right to reply.

- Set up independent oversight with public audits.

- Pair checks with media literacy and open datasets.

Put this into practice now. Keep using smart checks, share sources, and vote with confidence.